MACHINE LEARNING DEPLOYMENT: TECHNIQUES AND BEST PRACTICES

April 10, 2023

Samantha Jones

Introduction to Machine Learning

Machine learning refers to the ability of a program to collect data and use it, subject to validation criteria, to improve its performance on a task. Improvement here means raising the quality and accuracy of the results while reducing the time the task takes. The back-end code and algorithms let a machine learning system track, communicate, and exchange the information needed to complete the task.

Machine learning is a subset of artificial intelligence: many AI systems rely on machine learning, but not every AI technique is machine learning. An Android or iOS mobile app developer needs to understand the role platforms play in deployment and the techniques for optimizing model performance before deployment. This article also covers common deployment strategies and best practices that add value to a machine learning deployment project.

PLATFORMS FOR MACHINE LEARNING DEPLOYMENT

Deployment platforms offer a range of features for deploying and managing machine learning models. It is essential to check how much control each platform gives you over customizing your environment. Pricing also differs: some platforms charge on a pay-as-you-go basis, while others offer subscriptions or require upfront payment.

Selecting the right platform depends on the deployment needs and budget of the ML engineers and on the requirements of the Android or iOS mobile app developer. Developers use machine learning to improve speech recognition, behavioral analysis, and predictive analytics. Commonly used platforms include the following.

- Saturn Cloud

- Anaconda

- Azure Machine Learning by Microsoft

- Amazon SageMaker by AWS

- Colab by Google Research

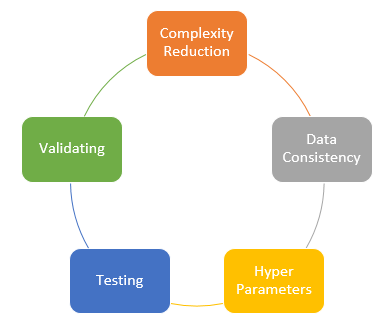

TECHNIQUES FOR PERFORMANCE OPTIMIZATION

After selecting a suitable platform, ML engineers and data scientists must optimize the model for production and testing scenarios. The optimization steps are as follows.

1. COMPLEXITY REDUCTION

Complex models run more slowly and consume more resources once deployed. Simplifying the model architecture improves performance, and reducing the number of parameters helps shorten deployment time.
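
As a rough illustration of how architecture choices drive parameter count, the sketch below counts the weights and biases of a fully connected network. The layer sizes are hypothetical and chosen only to show the scale of the difference; they are not taken from any particular model.

```python
def dense_param_count(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the width of each layer, input first, output last.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        # One weight per input/output pair, plus one bias per output unit.
        total += n_in * n_out + n_out
    return total


# Hypothetical architectures for the same 100-feature, 10-class problem.
large = [100, 512, 512, 10]
small = [100, 64, 10]

large_params = dense_param_count(large)
small_params = dense_param_count(small)
print(f"large: {large_params} parameters, small: {small_params} parameters")
```

Whether the smaller network is accurate enough is a separate question that only validation on your own data can answer.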

2. DATA CONSISTENCY

Data consistency calls for handling outliers and missing values before you use the data. Make sure the data is high quality and reliable, because that is what allows your model to perform consistently.
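
A minimal sketch of this kind of cleanup, assuming a single numeric column: it drops missing entries and then removes points that sit far from the median. The median-absolute-deviation rule and the threshold of 3 are illustrative choices, not a recommendation from the source, and the sample values are made up.

```python
import math
import statistics


def clean_values(values, max_deviations=3.0):
    """Drop missing entries, then drop points far from the median."""
    present = [v for v in values if v is not None and not math.isnan(v)]
    median = statistics.median(present)
    # Median absolute deviation: a spread estimate that outliers barely affect.
    mad = statistics.median(abs(v - median) for v in present)
    if mad == 0:
        return present  # all remaining values are (nearly) identical
    return [v for v in present if abs(v - median) / mad <= max_deviations]


# Made-up sensor readings with two missing entries and one obvious outlier.
raw = [10.2, 9.8, None, 10.5, float("nan"), 250.0, 10.1, 9.9]
cleaned = clean_values(raw)
print(cleaned)
```

Dropping rows is the simplest option; depending on the data, imputing missing values or capping outliers may be more appropriate.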

3. HYPERPARAMETERS

Hyperparameters are values that control the learning behavior of a model. The number of neurons, the activation function, the optimizer, the learning rate, and similar settings need tuning to reduce deployment time and improve model performance.
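
One common way to tune such settings is a grid search: train once per combination and keep the one with the lowest validation loss. The sketch below does this for a tiny linear model trained by gradient descent. The toy data, the grid values, and the helper names `train_linear` and `mse` are all hypothetical.

```python
import itertools


def train_linear(xs, ys, learning_rate, epochs):
    """Fit y = w * x + b with plain gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mse(xs, ys, w, b):
    """Mean squared error of the line y = w * x + b on the given points."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy data generated from y = 2x + 1, split into training and validation sets.
train_x, train_y = [0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0]
val_x, val_y = [4.0, 5.0], [9.0, 11.0]

# Hypothetical search grid over two hyperparameters.
grid = {"learning_rate": [0.001, 0.01, 0.1], "epochs": [50, 200]}

results = []
for lr, epochs in itertools.product(grid["learning_rate"], grid["epochs"]):
    w, b = train_linear(train_x, train_y, lr, epochs)
    results.append((mse(val_x, val_y, w, b), lr, epochs))

# Tuples compare by their first element, so min() picks the lowest loss.
best_loss, best_lr, best_epochs = min(results)
print(f"best: lr={best_lr}, epochs={best_epochs}, validation loss={best_loss:.6f}")
```

Scoring on held-out validation data rather than on the training data is what keeps the search from simply rewarding overfitting.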

4. TEST AND VALIDATE

Test and validate your model's performance in a test environment that mirrors production. Doing so helps you identify and resolve issues so that the production deployment goes smoothly.

DEPLOYMENT STRATEGIES

Once the model is optimized, the next essential task is selecting a deployment strategy. The main options are as follows.

API-BASED DEPLOYMENT

Applications that require real-time predictions use an API-based deployment strategy. The strategy takes its name from how it works: the machine learning model is deployed as a web service, and clients access it through an API.
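
A minimal sketch of such a web service, using only the Python standard library. The `/predict` path, the JSON request shape, and the fixed weights standing in for a trained model are all assumptions made for illustration; a production service would normally use a web framework and load a real model artifact.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Stand-in for a trained model: a fixed linear score (hypothetical weights)."""
    weights = [0.5, -0.25, 1.0]
    return sum(w * x for w, x in zip(weights, features))


class PredictionHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404, "Unknown endpoint")
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            features = [float(x) for x in payload["features"]]
        except (ValueError, KeyError, TypeError):
            self.send_error(400, "Invalid request body")
            return
        body = json.dumps({"prediction": predict(features)}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet; a real service should log requests


def make_server(host="127.0.0.1", port=8000):
    """Build the HTTP server; call serve_forever() on the result to start it."""
    return HTTPServer((host, port), PredictionHandler)
```

To try it locally, call `make_server().serve_forever()` and send a POST request to `http://127.0.0.1:8000/predict` with a JSON body such as `{"features": [2, 4, 1]}`.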

CONTAINER-BASED DEPLOYMENT

Large-scale deployments and on-premises applications use a container-based deployment strategy. Containerization bundles the machine learning model and its dependencies into lightweight containers that can be deployed in different environments.

SERVERLESS DEPLOYMENT

Machine learning models can also be deployed as cloud functions, so the team does not provision or manage any servers; the platform runs the code on demand. An Android or iOS mobile app developer might use a serverless deployment strategy because it is low-cost, flexible, and scalable.
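
A rough sketch of what a cloud function wrapping a model can look like. The `handler(event, context)` signature and the `statusCode`/`body` response shape follow the convention used by AWS Lambda behind an HTTP proxy integration; the source does not name a provider, so treat that choice as an assumption. The weights are a hypothetical stand-in for a real model file.

```python
import json

# Loaded once when the function instance starts and reused while it stays warm.
MODEL_WEIGHTS = [0.5, -0.25, 1.0]  # hypothetical stand-in for a real model


def handler(event, context):
    """Entry point the platform calls for each incoming request."""
    try:
        payload = json.loads(event.get("body") or "{}")
        features = [float(x) for x in payload["features"]]
    except (ValueError, KeyError, TypeError):
        return {"statusCode": 400, "body": json.dumps({"error": "invalid input"})}
    score = sum(w * x for w, x in zip(MODEL_WEIGHTS, features))
    return {"statusCode": 200, "body": json.dumps({"prediction": score})}


# Local smoke test: call the handler directly with a fake event.
response = handler({"body": json.dumps({"features": [2, 4, 1]})}, None)
print(response)
```

Keeping the model at module level rather than loading it inside the handler avoids repeating the load on every request.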

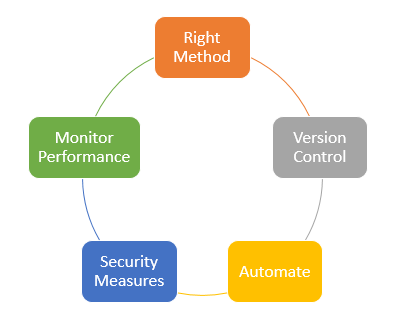

BEST PRACTICES FOR DEPLOYMENT

You may need to benchmark your deployment process against industry standards. Whichever deployment strategy you use, following best practices helps ensure a smooth deployment. The best practices are as follows.

1. SELECT THE RIGHT METHOD

The choice of deployment method should depend on the needs and objectives of the deployment rather than on personal preference. Large deep learning models are heavier and more varied, so ML engineers and data scientists can use cloud-based deployment with Azure Machine Learning or Anaconda. In contrast, web developers use API-based deployments to create web services for prediction.

2. CONTAINERIZE ACCORDINGLY

Containers include the code, runtime, libraries, and other dependencies, so the model can be deployed at any time. Containerization reduces the complexity of deployment and enables consistent deployment across different environments. An Android or iOS mobile app developer may use similar machine learning packages for different app development needs, so containerizing suits them well.

3. VERSION CONTROL

Tracking changes in code and models is difficult and frustrating without version control. Maintaining versions keeps a record of changes and lets a data scientist or an Android or iOS mobile app developer roll back to a prior version if necessary.
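
To make the rollback idea concrete, here is a small in-memory sketch that derives a version ID from the model's contents and keeps an ordered history. The `ModelRegistry` class is hypothetical and for illustration only; in practice teams usually rely on Git for code and a dedicated model registry or artifact store for models.

```python
import hashlib
import json


class ModelRegistry:
    """In-memory registry that versions models by content hash (illustrative)."""

    def __init__(self):
        self.versions = []  # ordered history of (version_id, params)

    def register(self, params):
        """Store a model's parameters and return a content-derived version ID."""
        blob = json.dumps(params, sort_keys=True).encode("utf-8")
        version_id = hashlib.sha256(blob).hexdigest()[:12]
        self.versions.append((version_id, params))
        return version_id

    def current(self):
        """Return the most recently registered (version_id, params) pair."""
        return self.versions[-1]

    def rollback(self):
        """Discard the latest version and return the one before it."""
        if len(self.versions) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.versions.pop()
        return self.current()


registry = ModelRegistry()
v1 = registry.register({"weights": [0.5, -0.25, 1.0]})
v2 = registry.register({"weights": [0.6, -0.20, 0.9]})
restored_id, restored_params = registry.rollback()
print(v1, v2, restored_id)
```

Hashing the contents means identical parameters always map to the same ID, which makes accidental duplicates easy to spot.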

4. AUTOMATION

Deployment automation removes the need to repeat the same steps by hand every time, which also reduces errors caused by human mistakes. Deployments can be scheduled for software that needs machine learning models redeployed regularly. Automation speeds up the machine learning pipeline.

5. SECURITY MEASURES

Like any other data asset, machine learning models face security threats. Best practices include encrypting the models and requiring authentication to protect valuable data.
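
The sketch below covers only the authentication half, since the Python standard library does not include a symmetric cipher for encrypting model files. It assumes one possible scheme: the client signs each request body with a shared secret using HMAC-SHA256, and the service verifies the signature before running the model. The key and the request body are placeholders.

```python
import hashlib
import hmac

# Placeholder secret; in practice, load it from a secrets manager, never from code.
SECRET_KEY = b"replace-with-a-real-secret"


def sign(body: bytes, key: bytes = SECRET_KEY) -> str:
    """Return the hex HMAC-SHA256 signature of a request body."""
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def is_authentic(body: bytes, signature: str, key: bytes = SECRET_KEY) -> bool:
    """Check a client-supplied signature in constant time."""
    expected = sign(body, key)
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(expected.encode("utf-8"), signature.encode("utf-8"))


request_body = b'{"features": [2, 4, 1]}'
signature = sign(request_body)

print(is_authentic(request_body, signature))             # genuine request
print(is_authentic(b'{"features": [9, 9, 9]}', signature))  # tampered body
```

A signature check like this also detects tampering with the request in transit, not just missing credentials.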

6. MONITOR PERFORMANCE

Monitoring the performance of machine learning models is vital for identifying and resolving issues. Continuous monitoring enables timely fixes that improve the models' accuracy and reliability. It requires tracking and analyzing the following aspects of a model.

- Prediction Accuracy

- Precision and Recall

- F1 Score

- Data Drift

- Latency and Throughput
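
The first three metrics in the list above can be computed directly from logged predictions once the true labels are known. The sketch below does so for a binary classifier; the label lists are made up for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up ground truth and logged predictions from a deployed model.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Recomputing these on a rolling window of recent traffic makes a drop in quality visible soon after it starts.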

CONCLUSION

Machine learning deployment is a crucial stage of the machine learning development process. How well a model is prepared and deployed affects the accuracy and reliability it delivers in production. Performance optimization helps speed up deployment. The optimization techniques covered here are complexity reduction, data consistency, hyperparameter tuning, and testing and validating in an environment that mirrors production.

The deployment strategies to choose from are API-based, container-based, and serverless (cloud functions); select the one that fits your needs. The best practices help you execute, monitor, and control machine learning deployments. Lastly, continuous performance monitoring helps keep the model accurate and reliable over time.